Medicaid Managed Care Organization

2014 Annual Technical Report

Submitted by:

Delmarva Foundation

April 2015

HealthChoice and Acute Care Administration

Division of HealthChoice Quality Assurance

Table of Contents

Executive Summary

Introduction

HACA Quality Strategy

EQRO Program Assessment Activities

General Overview of Findings

Assessment of Quality, Access, and Timeliness

Recommendations and Corrective Action Plans for MCOs

Best and Emerging Practice Strategies

I. Systems Performance Review

Introduction

Purpose

Methodology

Corrective Action Plan Process

Findings

Conclusions

II. Value Based Purchasing

Introduction

Performance Measure Selection Process

Value Based Purchasing Validation

2013 VBP Incentive/Disincentive Target Setting Methodology

2013 Value Based Purchasing Results

2013 VBP Financial Incentive and Disincentive Methodology

III. Performance Improvement Projects

Introduction

Methodology

Findings

Recommendations

IV. Encounter Data Validation

Introduction

Encounter Data Validation Process

Medical Record Review Procedure

Analysis Methodology

Medical Record Sampling

Results

Conclusions and Recommendations

V. EPSDT Medical Record Review

Introduction

Program Overview

Program Objectives

2013 EPSDT Review Process

Findings

Corrective Action Plan Process

Conclusions

VI. Healthcare Effectiveness Data and Information Set®

Introduction

Measures Designated for Reporting

HEDIS® Methodology

Findings

VII. Consumer Assessment of Health Providers and Systems®

Introduction

2014 CAHPS® 5.0H Medicaid Survey Methodology

Findings

VIII. Consumer Report Card

Introduction

Information Reporting Strategy

Analytic Methodology

2014 Report Card Results

IX. Review of Compliance with Quality Strategy

Recommendation for MCOs

Recommendations for HACA

Conclusion

Appendices

Acronym List

Adolescent Well Care (AWC) HEDIS® Specifications

Controlling High Blood Pressure (CBP) HEDIS® Specifications

HEDIS® Result Tables

CY 2014 MD HealthChoice Performance Report Card

Executive Summary

Introduction

The Maryland Department of Health and Mental Hygiene (DHMH) is responsible for evaluating the quality

of care provided to eligible participants in contracted Managed Care Organizations (MCOs) through the

Maryland Medicaid Managed Care Program, known as HealthChoice. HealthChoice has been operational

since June 1997 and operates pursuant to Title 42 of the Code of Federal Regulations (CFR), Section 438.204

and the Code of Maryland Regulations (COMAR) 10.09.65. HealthChoice’s philosophy is based on providing

quality health care that is patient-focused, prevention-oriented, comprehensive, coordinated, accessible, and

cost-effective.

DHMH’s HealthChoice and Acute Care Administration (HACA) is responsible for coordination and

oversight of the HealthChoice program. HACA ensures that the initiatives established in 42 CFR 438,

Subpart D are adhered to and that all MCOs that participate in the HealthChoice program apply these

principles universally and appropriately. The mission of HACA is to continuously improve both the clinical

and administrative aspects of the HealthChoice Program. The functions and infrastructure of HACA support

efforts to identify and address quality issues efficiently and effectively. Through a systematic process,

DHMH identifies both positive and negative trends in service delivery and outcomes. Quality monitoring,

evaluation, and education through enrollee and provider feedback are integral parts of the managed care

process and help to ensure that health care is not compromised.

DHMH is required to annually evaluate the quality of care provided to HealthChoice participants by

contracting MCOs. In adherence to Federal law [Section 1932(c)(2)(A)(i) of the Social Security Act], DHMH

is required to contract with an External Quality Review Organization (EQRO) to perform an independent

annual review of services provided by each contracted MCO to ensure that the services provided to the

participants meet the standards set forth in the regulations governing the HealthChoice Program. For this

purpose, DHMH contracts with Delmarva Foundation to serve as the EQRO.

Delmarva Foundation is a non-profit organization established in 1973 as a Professional Standards Review

Organization. Over the years, the company has grown in size and in mission. Delmarva Foundation is

designated by the Centers for Medicare and Medicaid Services (CMS) as a Quality Improvement Organization

(QIO)-like entity and performs External Quality Reviews and other services for the State of Maryland and

Medicaid agencies in a number of jurisdictions across the United States. The organization has continued to

build upon its core strength to develop into a well-recognized leader in quality assurance and quality

improvement.

Delmarva Foundation is committed to supporting the Department’s guiding principles and efforts to provide

quality and affordable health care to its burgeoning population of Medicaid recipients. As the EQRO,

Delmarva Foundation maintains a cooperative and collaborative approach in providing high quality, timely,

and cost-effective services to the Department. Delmarva Foundation’s goal is to assist the Department in this

challenging economic environment.

The HealthChoice program served 910,232 participants as of December 31, 2013 and contracted with

seven MCOs during this evaluation period. The seven MCOs evaluated during this period were:

AMERIGROUP Community Care (ACC)

Priority Partners (PPMCO)

Jai Medical Systems, Inc. (JMS)

Riverside Health of Maryland (RHMD)

Maryland Physicians Care (MPC)

UnitedHealthcare (UHC)

MedStar Family Choice, Inc. (MSFC)

RHMD began participating in the HealthChoice program in February 2013. The EQRO’s evaluation of

RHMD for calendar year (CY) 2013 included only the Systems Performance Review and Early and Periodic

Screening, Diagnosis, and Treatment (EPSDT) Medical Record Reviews, as the MCO did not have a full year

of participation in the HealthChoice system. RHMD's participation in all EQRO activities will begin in CY 2015.

Pursuant to 42 CFR 438.364, this Annual Technical Report describes the findings from Delmarva

Foundation’s External Quality Review activities for years 2012-2013, which took place in CY 2014. The report

includes each review activity conducted by Delmarva Foundation, the methods used to aggregate and analyze

information from the review activities, and conclusions drawn regarding the quality, access, and timeliness of

healthcare services provided by the HealthChoice MCOs.

HACA Quality Strategy

The overall goals of the Department’s Quality Strategy are to:

Ensure compliance with changes in Federal/State law and regulation;

Improve performance over time;

Allow comparisons to national and state benchmarks;

Reduce unnecessary administrative burden on MCOs; and,

Assist the Department with setting priorities and responding to identified areas of concern such as

children, pregnant women, children with special healthcare needs, adults with disabilities, and adults with

chronic conditions.

HACA works collaboratively with MCOs and stakeholders to identify opportunities for improvement and to

initiate quality improvement activities that will impact the quality of health care services for HealthChoice

participants.

EQRO Program Assessment Activities

Federal regulations require that three mandatory activities be performed by the EQRO using methods

consistent with protocols developed by the CMS for conducting the activities. These protocols specify that

the EQRO must conduct the following activities to assess managed care performance:

1) Conduct a review of MCOs’ operations to assess compliance with State and Federal standards for quality

program operations;

2) Validate State required performance measures; and

3) Validate State required Performance Improvement Projects (PIPs) that were underway during the prior

12 months.

Delmarva Foundation also conducted an optional activity: validation of encounter data reported by the

MCOs. As the EQRO, Delmarva Foundation conducted each of the mandatory activities and the optional

activity in a manner consistent with the CMS protocols during CY 2014.

Additionally, the following two review activities were conducted by Delmarva Foundation:

1) EPSDT Medical Record Reviews; and

2) Development and production of an annual Consumer Report Card to assist participants in selecting an MCO.

In aggregating and analyzing the data from each activity, Delmarva Foundation allocated standards and/or

measures to domains indicative of quality, access, and timeliness of care and services. Separate report sections

address each review activity and describe the methodology and data sources used to draw conclusions for the

particular area of focus. The final report section summarizes findings and recommendations to HACA and

the MCOs to further improve the quality of, timeliness of, and access to health care services for

HealthChoice participants.

General Overview of Findings

Assessment of Quality, Access, and Timeliness

For the purposes of evaluating the MCOs, Delmarva Foundation has adopted the following definitions

for quality, access, and timeliness:

Quality, as it pertains to external quality review, is defined as “the degree to which an MCO or

Prepaid Inpatient Health Plan increases the likelihood of desired health outcomes of its participants

(as defined in 42 CFR 438.320[2]) through its structural and operational characteristics and through

the provision of health services that are consistent with current professional knowledge.” ([CMS],

Final Rule: Medicaid Managed Care; 42 CFR Part 400, et al. Subpart D - Quality Assessment and Performance

Improvement, [June 2002]).

Access (or accessibility), as defined by the National Committee for Quality Assurance (NCQA), is “the

extent to which a patient can obtain available services at the time they are needed. Such service refers to

both telephone access and ease of scheduling an appointment, if applicable. The intent is that each

organization provides and maintains appropriate access to primary care, behavioral health care, and

member services.” (2006 Standards and Guidelines for the Accreditation of Managed Care Organizations).

Timeliness, as it relates to utilization management decisions and as defined by NCQA, is whether “the

organization makes utilization decisions in a timely manner to accommodate the clinical urgency of the

situation. The intent is that organizations make utilization decisions in a timely manner to minimize any

disruption in the provision of health care.” (2006 Standards and Guidelines for the Accreditation of Managed

Care Organizations). An additional definition of timeliness given in the Institute of Medicine National

Health Care Quality Report refers to “obtaining needed care and minimizing unnecessary delays in

getting that care.” (Envisioning the National Health Care Quality Report, 2001).

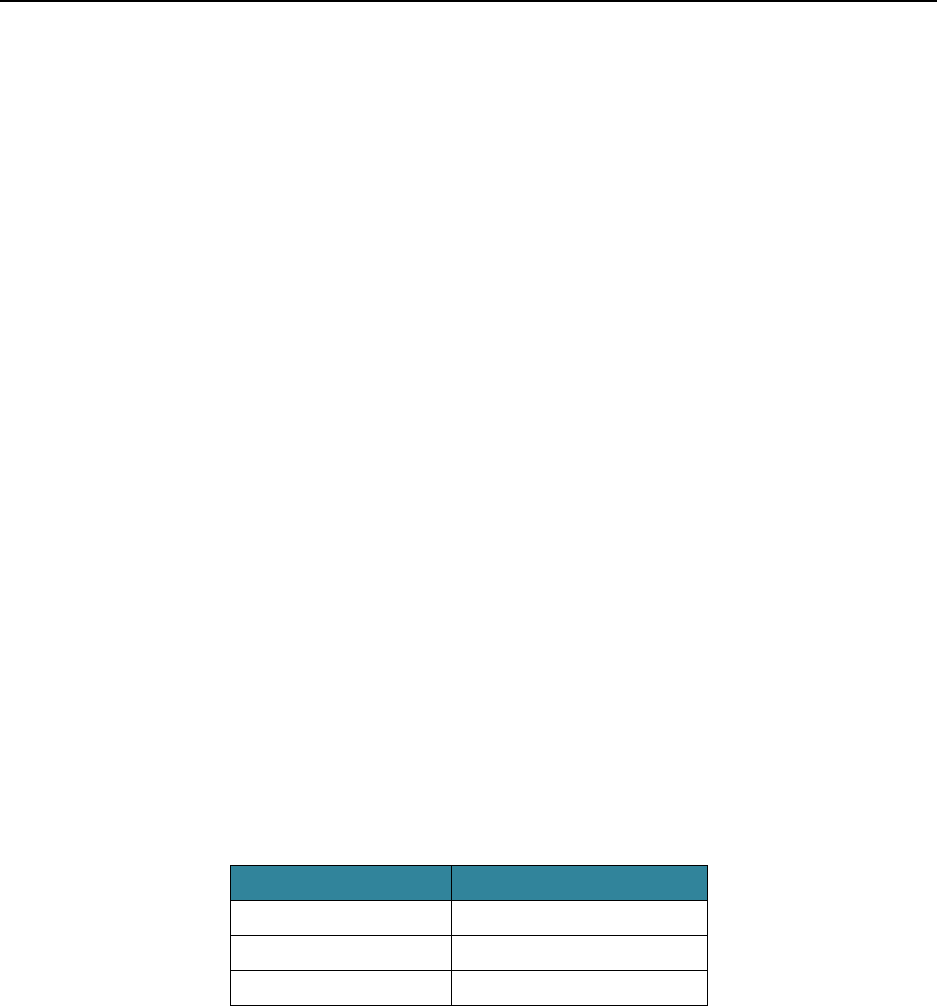

Table 1 outlines the review activities conducted annually that assess quality, access, and timeliness.

Table 1. Review Activities that Assess Quality, Access, and Timeliness

Annual Review Activities that Assess Quality, Access, and Timeliness

Systems Performance Review

Quality

Access

Timeliness

Standard 1 - Systematic Process of Quality Assessment and Improvement

√

Standard 2 - Accountability to the Governing Body

√

Standard 3 - Oversight of Delegated Entities

√

Standard 4 - Credentialing and Recredentialing

√

√

√

Standard 5 - Enrollee Rights

√

√

√

Standard 6 - Availability and Accessibility

√

√

Standard 7 - Utilization Review

√

√

√

Standard 8 - Continuity of Care

√

√

√

Standard 9 - Health Education Plan

√

√

Standard 10 - Outreach Plan

√

√

Standard 11 - Fraud and Abuse

√

√

Value Based Purchasing

Quality

Access

Timeliness

Adolescent Well Care

√

√

√

Ambulatory Care Services for SSI Adults Ages 21–64 Years

√

√

Ambulatory Care Services for SSI Children Ages 0–20 Years

√

√

Cervical Cancer Screening for Women Ages 21–64 Years

√

√

Childhood Immunization Status (Combo 3)

√

√

Eye Exams for Diabetics

√

√

Immunizations for Adolescents

√

√

Lead Screenings for Children Ages 12–23 Months

√

√

Postpartum Care

√

√

√

Well-Child Visits for Children Ages 3 – 6 Years

√

√

√

Performance Improvement Project

Quality

Access

Timeliness

Adolescent Well Care PIP

√

√

√

High Blood Pressure PIP

√

√

√

EPSDT Medical Record Review

Quality

Access

Timeliness

Health and Developmental History

√

√

Comprehensive physical examination

√

√

Laboratory tests/at-risk screenings

√

√

Immunizations

√

√

Health education and anticipatory guidance

√

√

Encounter Data Validation

Quality

Access

Timeliness

Inpatient, Outpatient, Office Visit Medical Record Review

√

HEDIS®

Quality

Access

Timeliness

Childhood Immunization Status

√

√

Immunizations for Adolescents

√

√

Appropriate Treatment for Children with Upper Respiratory Infection

√

Appropriate Testing for Children with Pharyngitis

√

Breast Cancer Screening

√

√

Cervical Cancer Screening

√

√

Chlamydia Screening in Women

√

√

Comprehensive Diabetes Care

√

√

Use of Appropriate Medications for People with Asthma

√

Use of Imaging Studies for Low Back Pain

√

Avoidance of Antibiotic Treatment in Adults with Acute Bronchitis

√

Adult BMI Assessment

√

√

Controlling High Blood Pressure

√

√

Annual Monitoring for Patients on Persistent Medications

√

√

Disease-Modifying Anti-Rheumatic Drug Therapy for Rheumatoid Arthritis

√

Medication Management for People with Asthma

√

Adults’ Access to Preventive/Ambulatory Health Services

√

√

Children and Adolescents’ Access to Primary Care Practitioners

√

√

Prenatal and Postpartum Care

√

√

Call Answer Timeliness

√

√

Initiation and Engagement of Alcohol and Other Drug Dependence Treatment

√

√

Frequency of Ongoing Prenatal Care

√

√

√

Well-Child Visits in the First 15 Months of Life

√

√

√

Well-Child Visits in the 3rd, 4th, 5th and 6th Years of Life

√

√

√

Adolescent Well-Care Visits

√

√

√

Ambulatory Care

√

Identification of Alcohol and Other Drug Services

√

√

Weight Assessment and Counseling for Nutrition and Physical Activity for Children/Adolescents

√

√

√

Use of Spirometry Testing in the Assessment and Diagnosis of COPD

√

√

Pharmacotherapy Management of COPD Exacerbation

√

√

Asthma Medication Ratio

√

Persistence of Beta-Blocker Treatment After a Heart Attack

√

√

CAHPS®

Quality

Access

Timeliness

Getting Needed Care

√

Getting Care Quickly

√

How Well Doctors Communicate

√

Customer Service

√

√

Shared Decision Making

√

Health Promotion and Education

√

Coordination of Care

√

Access to Prescription Medication*

√

Access to Specialized Services*

√

Family Centered Care: Personal Doctor Who Knows Your Child*

√

Family Centered Care: Getting Needed Information*

√

Coordination of Care for Children with Chronic Conditions*

√

*Additional Composite Measures for Children with Chronic Conditions

Recommendations and Corrective Action Plans for MCOs Prior Year Review Activities

Systems Performance Review

Although the Maryland (MD) MCO Aggregate rate was 99% in CY 2012, MCOs were required to submit

systems performance review Corrective Action Plans (CAPs) in areas where opportunities for improvement

were identified or in areas where non-conformance with federal and contractual operational systems was

noted.

The following CAPs were required from the MCOs in the last review period (January 1, 2012 – December 31,

2012):

ACC provided evidence of compliance with preauthorization determination.

ACC provided evidence of compliance with adverse determination notification time frames including the

process for reporting compliance with notification time frames.

ACC revised the Utilization Management Timeliness Audit Policy to incorporate the process for

monitoring and reporting compliance with State-required notification time frames.

JMS provided evidence of meeting time frames set forth in the MCO’s policies regarding recredentialing

decision date requirements.

PPMCO revised the Delegation Policy to ensure that it was in compliance with committee review and

approval of delegate complaint, grievance, and appeal reports on a quarterly basis.

PPMCO provided evidence of the appropriate committee’s review and approval of the annual Utilization

Management Program (UMP) and Utilization Management (UM) criteria for each entity that has been

delegated UM, including Block Vision.

PPMCO provided evidence of meeting time frames set forth in the MCO’s policies regarding

recredentialing decision date requirements in all records reviewed.

PPMCO resolved inconsistency between the Clinical Review Criteria Policy and the UMP as it pertains to

the responsibility for the development of internal criteria.

PPMCO demonstrated compliance with determination and notification time frames for all

preauthorization requests consistent with State regulation or MCO standards if the latter are more

stringent than State regulation.

PPMCO demonstrated that it consistently includes all required components in its adverse determination

letters.

UHC demonstrated that it provides ongoing monitoring of vendor CAPs specific to the MCO, with

documentation to support progress and resolution or recommendation for termination.

UHC clarified in the Delegation Manual which committee is responsible for review and approval of

delegate quarterly complaints and grievances reports.

UHC provided evidence that the appropriate committee reviews and approves complaint and

grievance reports on a quarterly basis.

UHC provided clearer documentation of committee review and approval of delegate reports to identify

the time period being reviewed.

UHC provided evidence of compliance with the 95% threshold for meeting regulatory time frames for

preauthorization determinations and for adverse determination notifications for any service requiring

preauthorization regardless of which unit conducts the review.

UHC provided documentation to support how compliance is measured and evidence of corrective action

when time frames are not met.

UHC provided evidence of a CAP to meet the minimum thresholds for compliance consistent with those

established by the State.

UHC provided a CAP to come into compliance with the 100% threshold for meeting regulatory time

frames for resolution of all expedited and routine appeals, including medical, substance abuse (SA), and

pharmacy. Additionally, MCO minimum thresholds for compliance must be consistent with those

established by the State.

UHC provided evidence of the Compliance Committee’s review and approval of administrative and

management procedures, including mandatory compliance plans to prevent fraud and abuse, for each

delegate that the MCO contracts with.

Overall, the MCOs demonstrated a commitment to providing quality and comprehensive health care to

HealthChoice members. Although these CAPs were followed up on in CY 2013, opportunities still remain

primarily in the areas of delegation and utilization management.

Performance Improvement Projects

Multiple recommendations were made to the MCOs as a result of the CY 2012 PIP review activities:

Complete a thorough and annual barrier analysis which will direct where limited resources can be most

effectively used to drive improvement.

Develop system-level interventions, which include educational efforts, changes in policy, targeting of

additional resources, or other organization-wide initiatives. Face-to-face contact is usually most effective.

To improve outcomes, interventions should be systematic (affecting a wide range of members, providers

and the MCO), timely, and effective.

Assess interventions for their effectiveness, and make adjustments where outcomes are unsatisfactory.

Detail the list of interventions (who, what, where, when, how many) to make the intervention

understandable and so that there is enough information to determine if the intervention was effective.

Although the MCOs addressed these recommendations in the CY 2012 PIPs, opportunities remain for

MCOs to improve both qualitative and quantitative analyses of

the study populations.

EPSDT Medical Record Review

The results of the EPSDT review demonstrate strong compliance with the timely screening and preventive

care requirements of the HealthChoice/EPSDT Program. The results of the CY 2012 review demonstrated

that improvements were needed in the following areas:

Immunizations - this component declined by two percentage points again this year.

Laboratory Tests/At-Risk Screenings - this component showed a slight increase of one percentage point.

The Laboratory Tests/At-Risk Screenings component represents an area in most need of improvement.

Recommendations for quality improvement continue to be shared with MCOs annually.

Two MCOs (MPC and PPMCO) required CAPs in CY 2012 for the Laboratory Tests/At-Risk Screenings

component. Although these CAPs were followed up on in CY 2013, continued opportunities were seen in the

area of Laboratory Tests/At-Risk Screenings. Overall review scores demonstrated that the Primary Care

Physicians (PCPs) and MCOs are committed to providing care that is patient-focused and

prevention-oriented.

Best and Emerging Practice Strategies

The MCOs effectively addressed quality, timeliness, and access to care issues in their respective managed care

populations. The MCOs implemented the following best practice strategies:

ACC has a comprehensive policy and procedure for the identification, referral, assignment of severity and

action taken to address clinical quality of care issues.

ACC has an objective means for scoring provider office site visits. The scoring guideline provides a

threshold for performance so that reviewers are able to determine when a CAP is required.

ACC has a highly integrated approach to care management of its members designed to address their

somatic and behavioral health needs.

JMS uses a prompt evaluation and approval schedule for the Quality Assurance (QA) Program, which helps

ensure that quality improvement efforts are effective and identifies the need for program change. For example, its

BOD reviews and approves the QA Annual Evaluation, QAP Description, and QA

Work Plan for the year within the first quarter of the operational year.

JMS provides a very detailed description of any additional information needed for reconsideration in all

adverse determination letters.

JMS health education classes/programs reflect the needs of the population based upon data analysis and

provider recommendations.

MPC has a well-documented process for performing the practice site reviews and what should be

addressed during the reviews.

MPC includes language in all adverse determination letters documenting the rationale for the

determination which is very clear and easy to understand for a layperson. Letters explain in detail the

reason for the determination, any authorization requirements, and any additional information needed for

reconsideration.

MPC consistently performed well above the State performance threshold for both determination and

notification time frames.

MSFC provides a very detailed and easily understandable explanation for the adverse determination as

well as additional information needed for reconsideration.

MSFC provides its members a very comprehensive menu of health education programs and support

groups throughout the community.

MSFC completes a comprehensive analysis of survey results from the Provider Health Education Survey

which supports the MCO in providing programs that are relevant and of value to the MCO population.

PPMCO continues to demonstrate excellent discovery methods for capturing, reporting, and tracking

QOC issues by provider.

PPMCO conducts thorough reviews of all provider applications and reviews 100% of practitioners for

malpractice history, independent of the outcome. Both deliberations and committee decisions are clearly

documented in the meeting minutes.

UHC has a very engaged Provider Advisory Committee led by the MCO’s Chief Medical Officer.

Meeting minutes reflect active provider discussion on operational issues that affect both members and

providers.

UHC completed a comprehensive analysis of CAHPS® and Provider Satisfaction survey results including

comparing results to goals/benchmarks and identifying barriers, opportunities for improvement, and

related interventions.

Section I

Systems Performance Review

Introduction

As the EQRO, Delmarva Foundation performed an independent annual review of services provided under

each MCO contract in order to ensure that the services provided to the participants meet the standards set

forth in the regulations governing the HealthChoice Program. COMAR 10.09.65 requires that all

HealthChoice MCOs comply with the Systems Performance Review (SPR) standards and all applicable

federal and state laws and regulations. This section describes the findings from the SPR for CY 2013,

conducted in January and February of 2014. All seven MCOs were evaluated during this review period.

The SPRs were conducted at the MCOs’ corporate offices and performed by a review team consisting of

health professionals, a nurse practitioner, and two master’s-prepared reviewers. The team has combined

experience of more than 45 years in managed care and quality improvement systems, 33 years of which are

specific to the HealthChoice program.

Purpose

The purpose of the SPR is to provide an annual assessment of the structure, process, and outcome of each

MCO’s internal quality assurance programs. Through the systems review, the team is able to identify, validate,

quantify, and monitor problem areas. The team completed the reviews and provided feedback to the Division

of HealthChoice Quality Assurance (DHQA) and each MCO with the goal of improving the care provided to

HealthChoice participants.

Methodology

For CY 2013, COMAR 10.09.65.03 required that all HealthChoice MCOs comply with the SPR standards

established by the Department and all applicable federal and state laws and regulations.

The following eleven performance standards were included in the CY 2013 review cycle:

Systematic Process of Quality Assessment*

Accountability to the Governing Body

Oversight of Delegated Entities

Credentialing and Recredentialing

Enrollee Rights

Availability and Accessibility

Utilization Review (UR)

Continuity of Care

Health Education*

Outreach*

Fraud and Abuse

*Note: These standards were exempt from the CY 2013 review cycle for all MCOs except for RHMD, as this was the MCO’s first SPR.

For CY 2013, all MCOs (except for RHMD) were expected to meet the compliance rate of 100% for all

standards. RHMD’s compliance rate was set at 80% for its first SPR. The MCOs were required to submit a

CAP for any standard that did not meet the minimum compliance rate.

In September 2013, Delmarva provided the MCOs with a “Medicaid Managed Care Organization Systems

Performance Review Orientation Manual” for Calendar Year 2013 and invited the MCOs to direct any

questions or issues requiring clarification to specific Delmarva and DHQA staff. The manual included the

following information:

Overview of External Quality Review Activities

CY 2013 Review Timeline

External Quality Review Contact Persons

Pre-site Visit Overview and Survey

Pre-site SPR Document List

Systems Performance Review Standards, including CY 2013 changes

System Performance Standards and Guidelines

Prior to the on-site review, the MCOs were required to submit a completed pre-site survey form and provide

documentation for various processes such as quality and UM, delegation, credentialing, enrollee rights,

continuity of care, outreach, and fraud and abuse policies. The documents provided were reviewed by

Delmarva staff prior to the on-site visit.

During the on-site reviews in January and February of 2014, the team conducted structured interviews with

key MCO staff and reviewed all relevant documentation needed to assess the standards. At the conclusion,

exit conferences were held with the MCOs. The purpose of the conferences was to provide the MCOs with

preliminary findings, based on interviews and all documentation reviewed. Notification was also provided

during the exit conferences that the MCOs would receive a follow-up letter describing potential issues that

could be addressed by supplemental documents, if available. The MCOs were given 10 business days from

receipt of the follow-up letter to submit any additional information to Delmarva; documents received were

subsequently reviewed against the standard(s) to which they related.
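
The 10-business-day window for supplemental documents can be illustrated with a minimal Python sketch. The function name, the weekend-only definition of a business day, the optional holiday set, and the receipt date are all assumptions made for illustration; the report does not state how business days were counted.

```python
from datetime import date, timedelta

def add_business_days(start, days, holidays=frozenset()):
    """Return the date `days` business days after `start`.

    Assumption: a business day is Monday through Friday, excluding any
    dates passed in `holidays`. The report does not define the term.
    """
    current = start
    remaining = days
    while remaining > 0:
        current += timedelta(days=1)
        # weekday() is 0-4 for Monday-Friday, 5-6 for the weekend
        if current.weekday() < 5 and current not in holidays:
            remaining -= 1
    return current

# Hypothetical receipt date for the follow-up letter (not taken from the report).
received = date(2014, 2, 3)
print(add_business_days(received, 10))
```

Passing a set of dates as `holidays` pushes the deadline out by one business day for each holiday that falls inside the window.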

After completing the on-site review, Delmarva documented its findings for each standard by element and

component. The level of compliance for each element and component was rated with a review determination

of met, partially met, or unmet, as follows:

Met: 100%

Partially Met: 50%

Unmet: 0%

Each element or component of a standard was of equal weight. A CAP was required for each performance

standard that did not meet the minimum required compliance rate, as defined for the CY 2013 review.
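
As an illustration of the scoring rule, the following minimal Python sketch computes a standard's compliance rate from its element and component determinations and checks it against a minimum compliance rate. The function names, the data layout, and the rounding to a whole percent are assumptions made for illustration and are not taken from Delmarva's review tools. The inputs reflect the Standard 3 findings reported in this section (seven elements reviewed; RHMD with one partially met and four unmet findings, UHC with two unmet findings).

```python
# Illustrative sketch only: names and rounding are assumptions, not Delmarva's tooling.
SCORES = {"met": 1.0, "partially met": 0.5, "unmet": 0.0}

def compliance_rate(determinations):
    """Return the equally weighted compliance rate (whole percent) for one standard."""
    if not determinations:
        raise ValueError("at least one element or component is required")
    total = sum(SCORES[d] for d in determinations)
    return round(100 * total / len(determinations))

def cap_required(rate, minimum):
    """A CAP is required when the rate falls below the minimum compliance rate."""
    return rate < minimum

# Standard 3 (7 elements reviewed), based on the CY 2013 findings.
rhmd = ["met"] * 2 + ["partially met"] + ["unmet"] * 4
uhc = ["met"] * 5 + ["unmet"] * 2

rhmd_rate = compliance_rate(rhmd)
uhc_rate = compliance_rate(uhc)
print(rhmd_rate, cap_required(rhmd_rate, minimum=80))   # RHMD minimum: 80%
print(uhc_rate, cap_required(uhc_rate, minimum=100))    # all other MCOs: 100%
```

Under these assumptions the sketch yields 36% for RHMD and 71% for UHC, which agrees with the Standard 3 rates reported in Table 2.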

If an MCO chose to have standards in its policies and procedures that were higher than what was required

by DHMH, the MCO was held accountable to the standards which were outlined in its policies and

procedures during the SPR.

The Department had the discretion to change a review finding to “Unmet” if the element or component had been

found “Partially Met” for more than one consecutive year.

Preliminary results of the SPR were compiled and submitted to DHMH for review. Upon the Department’s

approval, the MCOs received a report containing individual review findings. After receiving the preliminary

reports, the MCOs were given 45 calendar days to respond to Delmarva with required CAPs. The MCOs could

also respond to any other issues contained in the report at their discretion within this same time frame,

and/or requested a consultation with DHMH and Delmarva to clarify issues or ask for assistance in preparing

a CAP.

Corrective Action Plan Process

Each year the CAP process is discussed during the annual review meeting. This process requires each

MCO to submit a CAP which details the actions to be taken to correct any deficiencies identified during

the SPR. CAPs must be submitted within 45 calendar days of receipt of the preliminary report. CAPs are

reviewed by Delmarva and determined to be adequate only if they address the following required elements

and components:

Action item(s) to address each required element or component

Methodology for evaluating the effectiveness of actions taken

Time frame for each action item, including plans for evaluation

Responsible party for each action item
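
The four required elements listed above lend themselves to a simple completeness check. The sketch below is a minimal Python illustration, not Delmarva's actual review procedure: the `ActionItem` class, its field names, the helper functions, and the sample values (including the receipt date) are all hypothetical.

```python
from dataclasses import dataclass
from datetime import date, timedelta

@dataclass
class ActionItem:
    # Field names are hypothetical labels for the four required elements.
    description: str        # action addressing a required element or component
    evaluation_method: str  # methodology for evaluating effectiveness
    time_frame: str         # timing for the action, including plans for evaluation
    responsible_party: str  # party responsible for the action item

def missing_elements(item):
    """List the required CAP elements that are blank for one action item."""
    return [name for name, value in vars(item).items() if not value.strip()]

def cap_due_date(report_received):
    """CAPs are due within 45 calendar days of receipt of the preliminary report."""
    return report_received + timedelta(days=45)

# Hypothetical action item with the evaluation methodology left blank.
item = ActionItem(
    description="Add reporting requirements to all delegation agreements",
    evaluation_method="",
    time_frame="Second quarter of 2014",
    responsible_party="Quality director",
)
print(missing_elements(item))
print(cap_due_date(date(2014, 4, 1)))  # hypothetical receipt date
```

An action item with any blank field would be flagged for follow-up before the CAP is considered adequate.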

In the event that a CAP is deemed unacceptable, Delmarva Foundation will provide technical assistance to

the MCO until an acceptable CAP is submitted. Three MCOs were required to submit CAPs for the CY 2013

SPR. All CAPs were submitted, reviewed, and found to adequately address the standard in which the

deficiencies occurred.

Delmarva reviewed any additional materials submitted by the MCO, made appropriate revisions to the

MCO’s final report, and submitted the report to the DHMH for review and approval. The Final MCO

Annual System Performance Review Reports were mailed to the MCOs.

Corrective Action Plan Review

CAPs related to the SPR can be directly linked to specific components or standards. The annual SPR for CY

2014 will determine whether the CAPs from the CY 2013 review were implemented and effective. In order to

make this determination, Delmarva Foundation will evaluate all data collected or trended by the MCO

through the monitoring mechanism established in the CAP. In the event that an MCO has not implemented

or followed through with the tasks identified in the CAP, DHMH will be notified for further action.

Findings

The HealthChoice MCO annual SPR consists of 8 to 11 standards, depending on the MCO. The compliance

threshold established by DHMH for all standards for CY 2013 is 100% for all MCOs, except for RHMD for

which the compliance threshold is set at 80% for its first SPR.

All seven HealthChoice MCOs participated in the SPR. In areas where deficiencies were noted, the MCOs

were provided recommendations that, if implemented, should improve their performance for future reviews.

If the MCO’s score was below the minimum threshold, a CAP was required. Four MCOs (ACC, JMS, MPC,

and MSFC) received perfect scores in all standards. Three MCOs (PPMCO, RHMD, and UHC) were

required to submit CAPs for CY 2013. All CAPs were submitted, reviewed, and found to adequately address

the standard in which the deficiencies occurred.

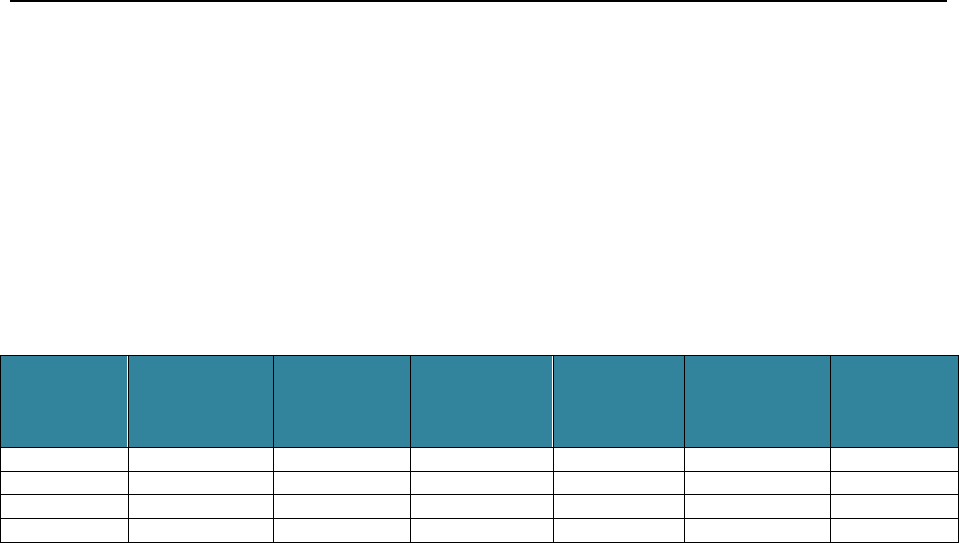

Table 2 provides a comparison of SPR results across MCOs and the MD MCO Compliance for the CY

2013 review.

Table 2. CY 2013 MCO Compliance Rates

| Standard | Description | Elements Reviewed | MD MCO Compliance Rate | ACC | JMS | MPC | MSFC | PPMCO | RHMD** | UHC |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | Systematic Process | 33 | 100% | Exempt | Exempt | Exempt | Exempt | Exempt | 100% | Exempt |
| 2 | Governing Body | 10 | 100% | 100% | 100% | 100% | 100% | 100% | 100% | 100% |
| 3 | Oversight of Delegated Entities | 7 | 83%* | 100% | 100% | 100% | 100% | 100% | 36%* | 71%* |
| 4 | Credentialing | 38 | 98%* | 100% | 100% | 100% | 100% | 100% | 98%* | 100% |
| 5 | Enrollee Rights | 21 | 96%* | 100% | 100% | 100% | 100% | 90%* | 94%* | 90%* |
| 6 | Availability and Access | 10 | 96%* | 100% | 100% | 100% | 100% | 95%* | 80%* | 100% |
| 7 | Utilization Review | 24 | 90%* | 100% | 100% | 100% | 100% | 80%* | 67%* | 85%* |
| 8 | Continuity of Care | 4 | 100% | 100% | 100% | 100% | 100% | 100% | 100% | 100% |
| 9 | Health Education Plan | 12 | 88%* | Exempt | Exempt | Exempt | Exempt | Exempt | 88%* | Exempt |
| 10 | Outreach Plan | 14 | 93%* | Exempt | Exempt | Exempt | Exempt | Exempt | 93%* | Exempt |
| 11 | Fraud and Abuse | 19 | 98%* | 100% | 100% | 100% | 100% | 100% | 89%* | 100% |

*Denotes that the minimum compliance rate of 100% was unmet.

**RHMD’s minimum compliance threshold is set at 80%, as this was the MCO’s first SPR.

For each standard assessed for CY 2013, the following section describes the requirements reviewed; the

results, including the MD MCO compliance rate; the overall MCO findings; the individual MCO

opportunities for improvement and CAP requirements, if applicable; and follow up, if required.

STANDARD 1: Systematic Process of Quality Assessment/Improvement

Requirements: The Quality Assurance Program (QAP) objectively and systematically monitors/evaluates the

quality of care (QOC) and services to participants. Through QOC studies and related activities, the MCO pursues

opportunities for improvement on an ongoing basis. The QAP studies monitor QOC against clinical practice

guidelines which are based on reasonable evidence based practices. The QAP must have written guidelines for its

QOC studies and related activities that require the analysis of clinical and related services. The QAP must include

written procedures for taking appropriate corrective action whenever inappropriate or substandard services are

furnished. The QAP must have written guidelines for the assessment of the corrective actions. The QAP

incorporates written guidelines for evaluation of the continuity and effectiveness of the QAP. A comprehensive

annual written report on the QAP must be completed, reviewed, and approved by the MCO governing body. The

QAP must contain an organizational chart that includes all positions required to facilitate the QAP.

Results:

All MCOs (except for RHMD) were exempt from this standard because each had received

compliance ratings of 100% for the past three consecutive years.

RHMD met the minimum compliance threshold for this standard.

Findings: This was RHMD’s first review of their QAP. It was found to be comprehensive in scope and to

appropriately monitor and evaluate the quality of care and service to members using meaningful and relevant

performance measures. Clinical care standards and/or practice guidelines are in place against which the MCO monitors

performance annually, and clinicians monitor and evaluate quality through review of individual cases where

there are questions about care. Additionally, there is evidence of development, implementation, and monitoring of

corrective actions.

MCO Opportunity/CAP Required

No CAPs were required.

Follow-up: No follow-up is required.

STANDARD 2: Accountability to the Governing Body

Requirements: The governing body of the MCO is the Board of Directors or, where the Board’s participation with

the quality improvement issues is not direct, a designated committee of the MCO’s senior management. The

governing body is responsible for monitoring, evaluating, and making improvements to care. There must be

documentation that the governing body has oversight of the QAP. The governing body must approve the overall

QAP and an annual QAP. The governing body formally designates an accountable entity or entities within the

organization to provide oversight of quality assurance, or has formally decided to provide oversight as a committee.

The governing body must routinely receive written reports on the QAP that describe actions taken, progress in

meeting quality objectives, and improvements made. The governing body takes action when appropriate and directs

that the operational QAP be modified on an ongoing basis to accommodate review of findings and issues of concern

within the MCO. The governing body is active in credentialing, recredentialing, and utilization review activities.

Results: The overall MD MCO Compliance Rate was 100% for CY 2013.

Findings: Overall, MCOs continue to have appropriate oversight by their governing boards. Evidence was provided

of the oversight provided by the governing body, along with ongoing feedback and direction of quality improvement

activities and operational activities of the MCO.

MCO Opportunity/CAP Required

No CAPs were required.

Follow-up: No follow-up is required.

STANDARD 3: Oversight of Delegated Entities

Requirements: The MCO remains accountable for all functions, even if certain functions are delegated to other

entities. There must be a written description of the delegated activities, the delegate's accountability for these

activities, and the frequency of reporting to the MCO. The MCO has written procedures for monitoring and

evaluating the implementation of the delegated functions and for verifying the quality of care being provided. The

MCO must also provide evidence of continuous and ongoing evaluation of delegated activities.

Results:

The overall MD MCO Compliance Rate was 83% for CY 2013.

ACC, JMS, MPC, MSFC, and PPMCO met the minimum compliance threshold for this standard.

RHMD and UHC were required to submit CAPs.

Findings: MCOs continue to demonstrate opportunities for improvement in this standard regarding delegation

policies and procedures and in the monitoring and evaluation of delegated functions.

MCO Opportunity/CAP Required

RHMD Opportunities/CAPs:

Element 3.1 – There is a written description of the delegated activities, the delegate’s accountability for these

activities, and the frequency of reporting to the MCO.

RHMD received a finding of partially met because the delegated agreements provided a detailed listing of specific

delegated claims processing activities and procedures; however, no specific performance measures or reporting

requirements were identified. Additionally, formalized responsibilities, which had been delegated to the vendor and

clearly outlined in amendments, were not found for functions such as complaints, grievances, and appeals.

In order to receive a finding of met in the CY 2014 SPR, RHMD must ensure that all delegation agreements

accurately reflect responsibility for specific delegated activities. Additionally, specific reporting requirements and

performance measures need to be included in all delegation agreements.

Component 3.3b – There is evidence of continuous and ongoing evaluation of delegated activities, including

quarterly review and approval of reports from the delegates that are produced at least quarterly regarding

complaints, grievances, and appeals, where applicable.

RHMD received a finding of unmet because there was no evidence of Quality Improvement Committee (QIC)

quarterly review and approval of two delegated vendors’ quarterly complaint, grievance, and appeal reports for the

first, second, or third quarter of 2013. The MCO did not commence operations until February of 2013; therefore,

there were no delegated activities for the fourth quarter of 2012.

In order to receive a finding of met in the CY 2014 SPR, RHMD must provide evidence of formal review and

approval of delegate quarterly complaint, grievance, and appeal reports on a quarterly basis by the appropriate

committee designated in the MCO's policy.

Component 3.3c - There is evidence of continuous and ongoing evaluation of delegated activities, including

review and approval of claims payment activities, where applicable.

RHMD received a finding of unmet because there was no evidence of the QIC’s review and approval of three

delegated vendors’ claims activities reports since the MCO’s commencement of operations in mid-February 2013.

In order to receive a finding of met in the CY 2014 SPR, RHMD must provide evidence of formal review and

approval of delegate claims activities reports by the appropriate committee designated in the MCO's policy and

according to the stated frequency.

Component 3.3d - There is evidence of continuous and ongoing evaluation of delegated activities, including

review and approval of the delegated entities’ UM plan, which must include evidence of review and approval

of UM criteria by the delegated entity, where applicable.

RHMD received a finding of unmet because there was no evidence of QIC review and approval of the annual UMP

and UM criteria from two of the delegated vendors in 2013.

In order to receive a finding of met in the CY 2014 SPR, RHMD must provide evidence of formal review and

approval of each delegate's annual UMP and UM criteria by the appropriate committee designated in the MCO's

policy.

Component 3.3e - There is evidence of continuous and ongoing evaluation of delegated activities, including

review and approval of over and underutilization reports, where applicable.

RHMD received a finding of unmet because there was no evidence of QIC review and approval of two delegated

vendors’ over and underutilization reports since the MCO’s commencement of operations in mid-February 2013.

In order to receive a finding of met in the CY 2014 SPR, RHMD must provide evidence of formal review and

approval of each delegate's over/under utilization report(s) by the appropriate committee designated in the MCO's

policy and according to the stated frequency.

UHC Opportunities/CAPs:

Component 3.3a – There is evidence of continuous and ongoing evaluation of delegated activities, including

oversight of delegated entities’ performance to ensure the quality of the care and/or service provided, through

the review of regular reports, annual reviews, site visits, etc.

UHC received a finding of unmet because this was the second year that there were opportunities for improvement

identified in this area of review. As a result of the CY 2012 SPR finding, UHC was required to submit a CAP to

provide evidence of ongoing oversight and monitoring of delegated entities. The CAP was not fully implemented

and continuing opportunities for improvement exist. According to the Director of Marketing, routine monitoring of

delegated entities occurred informally through ad hoc meetings convened in response to identified issues. There was

no documentation of these meetings. There was no evidence of review of delegated vendors’ annual audit findings.

Evidence was provided supporting an annual credentialing audit; however, there was no evidence of a claims audit,

which is also a delegated activity.

In order to receive a finding of met in the CY 2014 SPR, UHC must provide ongoing evidence of routine monitoring

and oversight of each delegated entity that includes documented review of annual audit findings of delegated

activities and monitoring of any CAPs.

Component 3.3e - There is evidence of continuous and ongoing evaluation of delegated activities, including

review and approval of over and underutilization reports, where applicable.

UHC received a finding of unmet because the QMC did not review over and underutilization comparisons on an

annual basis.

In order to receive a finding of met in the CY 2014 SPR, UHC must provide evidence of review/approval of any

UM delegated entity’s over/under utilization report(s) by the appropriate committee at intervals consistent with the

MCO's policy.

Follow-up:

RHMD and UHC were required to submit CAPs for the above elements/components. Delmarva Foundation

reviewed and approved the submissions.

The approved CAPs will be reviewed during the CY 2014 SPR.

STANDARD 4: Credentialing and Recredentialing

Requirements: The QAP must contain all required provisions to determine whether physicians and other health

care professionals licensed by the State and under contract with the MCO are qualified to perform their services. The

MCO must have written policies and procedures for the credentialing process that govern the organization’s

credentialing and recredentialing. There is documentation that the MCO has the right to approve new providers and

sites and to terminate or suspend individual providers. The MCO may delegate credentialing/recredentialing

activities with a written description of the delegated activities, a description of the delegate’s accountability for

designated activities, and evidence that the delegate accomplished the credentialing activities. The credentialing

process must be ongoing and current. There must be evidence that the MCO requests information from recognized

monitoring organizations about the practitioner. The credentialing application must include information regarding

the use of illegal drugs, a history of loss of license and loss or limitation of privileges or disciplinary activity, and an

attestation to the correctness and completeness of the application. There must be evidence of an initial visit to each

potential PCP’s office with documentation of a review of the site and medical record keeping practices to ensure

compliance with the Americans with Disabilities Act and the MCO’s standards.

There must be evidence that recredentialing is performed at least every three years and includes a review of enrollee

complaints, results of quality reviews, hospital privileges, current licensure, and office site compliance with

Americans with Disabilities Act of 1990 (ADA) standards, if applicable.

Results:

The overall MD MCO Compliance Rate was 99% for CY 2013.

All MCOs met the minimum compliance threshold for this standard.

RHMD received a compliance rate of 98%, which exceeds its minimum compliance threshold of 80% for its

first review.

Findings: Overall, MCOs have appropriate policies and procedures in place to determine whether physicians and

other health care professionals, licensed by the State and under contract to the MCO, are qualified to perform their

services. Evidence in credentialing and recredentialing records demonstrated that those policies and procedures are

functioning effectively. There were issues identified with the recredentialing process over the past year which

accounted for the slight decline in the overall MCO compliance rate.

MCO Opportunity/CAP Required

No CAPs were required.

Follow-up: No follow-up is required.

STANDARD 5: Enrollee Rights

Requirements: The organization demonstrates a commitment to treating participants in a manner that acknowledges

their rights and responsibilities. The MCO must have a system linked to the QAP for resolving participants’

grievances. This system must meet all requirements in COMAR 10.09.71.02 and 10.09.71.04. Enrollee information

must be written to be readable and easily understood. This information must be available in the prevalent non-

English languages identified by the Department. The MCO must act to ensure that the confidentiality of specified

patient information and records is protected. The MCO must have written policies regarding the appropriate

treatment of minors. The MCO must, as a result of the enrollee satisfaction surveys, identify and investigate sources

of enrollee dissatisfaction, implement steps to follow-up on the findings, inform practitioners and providers of

assessment results, and reevaluate the effectiveness of the implementation steps at least quarterly. The MCO must

have systems in place to assure that new participants receive required information within established time frames.

Results:

The overall MD MCO Compliance Rate was 96% for CY 2013.

ACC, JMS, MPC, and MSFC met the minimum compliance threshold for this standard.

PPMCO, RHMD, and UHC were required to submit CAPs.

Findings: Overall, MCOs have policies and procedures in place that demonstrate their commitment to treating

members in a manner that acknowledges their rights and responsibilities. Evidence of enrollee information was

reviewed and found to be easily understood and written in Spanish as required by the Department.

Additionally, all MCOs provided evidence of their complaint, grievance, and appeals processes. However,

opportunities for improvement did exist regarding policies and procedures, complaints/grievances, and satisfaction

surveys.

MCO Opportunity/CAP Required

PPMCO Opportunities/CAPs:

Component 5.1d – The grievance policy and procedure describes the process for aggregation and analysis of

grievance data and the use of the data for QI. There is documented evidence that this process is in place and

is functioning.

PPMCO received a finding of partially met because its Member Complaint/Grievance Policy did not reflect the

correct committee reporting structure.

In order to receive a finding of met in the CY 2014 SPR, PPMCO must revise the Member Complaint/Grievance

Policy to reflect the correct reporting structure.

Component 5.1f - There is complete documentation of the substance of the grievances and steps

taken.

PPMCO received a finding of partially met because after a review of 35 complaint/grievance records, it was found

that the documentation of the substance of the complaint/grievance in the electronic system, along with the letters to

members regarding the complaint/grievance and its resolution, was not complete in several records. Additionally,

the documentation in the complaint/grievance records did not match up to the dates noted in the system: start dates,

completion dates, dates on customer service call notes, and response letter dates.

In order to receive a finding of met in the CY 2014 SPR, PPMCO must provide complete and clear documentation

of the substance of the grievances and steps taken in each record.

Component 5.1g – The MCO adheres to the time frames set forth in its policies and procedures for resolving

grievances.

PPMCO received a finding of unmet because a review of 35 complaint/grievance records found that the current

electronic system did not clearly track the dates of resolution activity for all records.

In order to receive a finding of met in the CY 2014 SPR, PPMCO must adhere to the time frames set forth in its

policies and procedures for resolving grievances in all records.

RHMD Opportunities/CAPs:

Component 5.6a - Policies and procedures are in place that address the content of new enrollee packets of

information and specify the time frames for sending such information to the enrollee.

RHMD received a finding of partially met because the MCO does not have a formal written policy and procedure

that includes the content of new enrollee packets and the regulatory time frames for mailing such information to new

participants. Currently, welcome packet fulfillment reports are reviewed daily, along with the use of Health Risk

Assessments and Welcome Calls to confirm receipt of new enrollee packets.

In order to receive a finding of met in the CY 2014 SPR, RHMD must develop a policy and procedure that includes

the content of new enrollee packets and the regulatory time frames for mailing such information to new participants.

UHC Opportunities/CAPs:

Component 5.1g – The MCO adheres to the time frames set forth in its policies and procedures for resolving

grievances.

UHC received a finding of unmet because grievance records demonstrated that resolution letters were absent from

almost all case records due to staffing changes and training issues during 2013. Therefore, the reviewer was unable

to determine whether or not resolutions met the required time frames. UHC proactively developed a CAP prior to

the review to rectify the noncompliant situation, including a new tracking grid, implementation of weekly and

quarterly audits, and secured electronic record keeping. These activities will begin in February 2014.

In order to receive a finding of met in the CY 2014 SPR, UHC must adhere to the time frames set forth in the

MCO’s policies and procedures for resolving grievances.

Component 5.5c - As a result of the enrollee satisfaction surveys, the MCO informs practitioners and

providers of assessment results.

UHC received a finding of unmet because the MCO did not notify providers of the annual satisfaction survey

results. UHC would normally publish the results and analysis of the 2013 CAHPS® survey (measuring data from

CY 2012) in the fourth quarter 2013 provider newsletter.

In order to receive a finding of met in the CY 2014 SPR, UHC must inform practitioners and providers of

assessment results.

Follow-up:

PPMCO, RHMD, and UHC were required to submit CAPs for the above elements/components. Delmarva

Foundation reviewed and approved the submissions.

The approved CAPs will be reviewed for compliance during the CY 2014 SPR.

STANDARD 6: Availability and Accessibility

Requirements: The MCO must have established measurable standards for access and availability. The MCO must

have a process in place to assure MCO service, referrals to other health service providers, and accessibility and

availability of health care services. The MCO must have a list of providers that are currently accepting new

participants. The MCO must implement policies and procedures to assure that there is a system in place for notifying

participants of due dates for wellness services.

Results:

The overall MD MCO Compliance Rate was 96% for CY 2013.

ACC, JAI, MPC, MSFC, RHMD, and UHC met the minimum compliance threshold for this standard.

PPMCO was required to submit a CAP.

Findings: Overall, MCOs have established appropriate standards for ensuring access to care and have fully

implemented a system to monitor performance against these standards. All MCOs have current provider directories

that list providers that are currently accepting new participants, along with websites and helplines that are easily

accessible to members. Each MCO has an effective system in place for notifying members of wellness

services. However, opportunities exist regarding consistency in policies and procedures and corrective action

planning.

MCO Opportunity/CAP Required

Component 6.1c - The MCO has established policies and procedures for the operations of its

customer/enrollee services and has developed standards/indicators to monitor, measure, and report on its

performance.

PPMCO received a finding of partially met because the MCO’s Access, Availability and Performance Standards

Policy cited performance standards that were inconsistent with its call center metrics. Additionally, the policy was

silent as to how to rectify ongoing noncompliance with call center performance standards.

In order to receive a finding of met in the CY 2014 SPR, PPMCO must revise either the Access, Availability and

Performance Standards Policy or the Call Center Metric goal so that both documents state the same goal for calls

answered within 30 seconds (availability rate for customer service representatives). Currently, the policy states 90%

and the matrix spreadsheet states 85%.
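
To illustrate why the two goals need to agree, the following minimal sketch computes the share of calls answered within 30 seconds and compares it against both stated goals. The function name and the sample answer times are hypothetical and are not drawn from PPMCO data; only the 30-second limit and the 90% and 85% goals come from the finding above.

```python
from typing import Sequence

POLICY_GOAL_PCT = 90.0       # goal stated in the policy (from the finding above)
SPREADSHEET_GOAL_PCT = 85.0  # goal stated in the spreadsheet (from the finding above)


def service_level(answer_times_sec: Sequence[float], limit_sec: float = 30.0) -> float:
    """Return the percentage of calls answered within limit_sec seconds."""
    if not answer_times_sec:
        raise ValueError("no calls to evaluate")
    within = sum(1 for t in answer_times_sec if t <= limit_sec)
    return 100.0 * within / len(answer_times_sec)


# Hypothetical answer times in seconds (illustrative only, not report data).
sample_times = [5, 12, 18, 22, 25, 29, 30, 45]
rate = service_level(sample_times)

print(f"Answered within 30 seconds: {rate:.1f}%")
print("Meets spreadsheet goal:", rate >= SPREADSHEET_GOAL_PCT)
print("Meets policy goal:", rate >= POLICY_GOAL_PCT)
```

With these illustrative values, the same performance meets one goal and misses the other, so the compliance result depends on which document is consulted.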

Follow-up:

PPMCO was required to submit a CAP for the above element/component. Delmarva Foundation reviewed and

approved the submission.

The approved CAP will be reviewed for compliance during the CY 2014 SPR.

STANDARD 7: Utilization Review

Requirements: The MCO must have a comprehensive Utilization Management Program, monitored by the

governing body, and designed to evaluate systematically the use of services through the collection and analysis of

data in order to achieve overall improvement. The Utilization Management Plan must specify criteria for Utilization

Review/Management decisions. The written Utilization Management Plan must have mechanisms in place to detect

overutilization and underutilization of services. For MCOs with preauthorization or concurrent review programs,

the MCO must substantiate that: preauthorization, concurrent review, and appeal decisions are made and supervised

by appropriate qualified medical professionals; efforts are made to obtain all necessary information, including

pertinent clinical information, and to consult with the treating physician as appropriate; the reasons for decisions are

clearly documented and available to the enrollee; there are well publicized and readily available appeal mechanisms

for both providers and participants; preauthorization and concurrent review decisions are made in a timely manner

as specified by the State; appeal decisions are made in a timely manner as required by the exigencies of the

situation; and the MCO maintains policies and procedures pertaining to provider appeals as outlined in COMAR

10.09.71.03. Adverse determination letters must include a description of how to file an appeal and all other required

components. The MCO must also have policies, procedures, and reporting mechanisms in place to evaluate the

effects of the Utilization Management Program by using data on enrollee satisfaction, provider satisfaction, or other

appropriate measures.

Results:

The overall MD MCO Compliance Rate was 90% in CY 2013.

ACC, JAI, MPC, and MSFC met the minimum compliance threshold for this standard.

PPMCO, RHMD and UHC were required to submit CAPs.

Findings: Overall, MCOs have strong Utilization Management Plans that describe procedures to evaluate medical

necessity criteria used, information sources, procedures for training and evaluating staff, monitoring of the

timeliness and content of adverse determination notifications, and the processes used to review and approve the

provision of medical services. The MCOs provided evidence that qualified medical personnel supervise

preauthorization and concurrent review decisions. The MCOs have implemented mechanisms to detect over and

underutilization of services. Overall, policies and procedures are in place for providers and participants to appeal

decisions. However, continued opportunities were present in the area of monitoring the compliance of UR decisions.

MCO Opportunity/CAP Required

PPMCO Opportunities/CAPs:

Component 7.3a - Services provided must be reviewed for over and underutilization.

PPMCO received a finding of partially met. The Over and Under Utilization Policy outlines procedures for

monitoring potential over and underutilization and developing interventions, as indicated. Monitoring is to

occur on a quarterly basis, with results reported to the Quality Improvement Work Group (QIWG). Although it was

evident that the UM Close Committee was reviewing utilization trends for some inpatient services, there was no

evidence that the committee reported results to the QIWG in a manner consistent with the MCO’s policy.

Additionally, there was no evidence of follow-up on action items requiring further investigation of identified

trends to assess for over or underutilization.

In order to receive a finding of met in the CY 2014 SPR, PPMCO must provide evidence that the MCO is following

its policies for monitoring and reporting of potential over and underutilization. There must also be evidence of

follow-up on identified action plans requiring further investigation of potential over and underutilization.

Component 7.3b – Utilization review reports must provide the ability to identify problems and take the

appropriate corrective action.

PPMCO received a finding of unmet because a review of QIWG meeting minutes from 2013 found no evidence that

the MCO identified problems of over/under utilization and implemented corrective action.

The MCO did provide two examples of BH meeting minutes that primarily focused on the State-required SA

performance improvement project and noted that reports had been presented to the QIWG. Use of a State-required

performance improvement project does not meet the intent of this standard/component.

In order to receive a finding of met in the CY 2014 SPR, PPMCO must provide evidence that the MCO takes

corrective action in response to identified over/under utilization problems as documented in the appropriate

committee meeting minutes.

Component 7.3c - Corrective measures implemented must be monitored.

PPMCO received a finding of unmet because there was no documentation in appropriate committee meeting

minutes that corrective measures to address over/under utilization were monitored in 2013.

In order to receive a finding of met in the CY 2014 SPR, PPMCO must provide evidence that corrective measures

implemented to address over/under utilization problems are monitored by the appropriate committee.

Component 7.4c - The reasons for decisions are clearly documented and available to the enrollee.

PPMCO received a finding of partially met because a sample of member adverse determination letters contained

unclear language taken from the criteria used to make the determination. For example, the letters used standard

medical terminology such as "functional plateau" and "decline in speech intelligibility." Such terms are not easily

understood by members and are inappropriate in a letter to a member.

In order to receive a finding of met in the CY 2014 SPR, PPMCO must document the reasons for decisions in

clearly understandable language for the member.

Component 7.4e - Preauthorization and concurrent review decisions are made in a timely manner as specified

by the State.

In response to the CY 2012 SPR findings, PPMCO was required to develop a CAP to demonstrate consistent

compliance with determination and notification time frames specified by the State. In CY 2013, opportunities for

improvement remained; therefore, PPMCO received a finding of unmet for this component. The

Inpatient Preauthorizations document identified compliance with turnaround times by month throughout 2013.

Compliance exceeded the 95% threshold with the exception of June, which was slightly below at 94.8%.
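
The month-by-month comparison described above can be sketched as a simple threshold check. This is a minimal illustration only: the function name is hypothetical, and of the sample values only the June rate (94.8%) and the 95% threshold come from this report. The other monthly rates are placeholders.

```python
from typing import Dict, List

THRESHOLD_PCT = 95.0  # compliance threshold cited in this report


def months_below_threshold(rates: Dict[str, float],
                           threshold: float = THRESHOLD_PCT) -> List[str]:
    """Return the months whose compliance rate falls below the threshold."""
    return [month for month, rate in rates.items() if rate < threshold]


# Only the June value is taken from the report; May and July are
# placeholder values used purely for illustration.
monthly_rates = {"May": 96.0, "June": 94.8, "July": 97.5}

failing = months_below_threshold(monthly_rates)
print("Months below threshold:", failing)
```

Under this reading, a single month below 95% is enough to fall short of consistent compliance, even when the shortfall is only 0.2 percentage points.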

In order to receive a finding of met in the CY 2014 SPR, PPMCO must consistently demonstrate compliance at the

95% threshold in response to State-required time frames for preauthorization determinations and adverse

determination notifications for medical, pharmacy, and SA.